11.11.2024

State of Development in Crypto: Is the Job Finished?

The crypto space has been building like crazy, but let's keep it real: we're still dealing with some major technical hurdles that even the biggest brains in crypto haven't fully cracked yet. And we're not talking about "number go up," but about the fundamental elements that could make or break mainstream adoption.

From L2s that still haven't reached their full potential to cross-chain experiences that remain cumbersome and not fully secure or trustless, the tech challenges are still real.

Ever tried explaining to your non-crypto friends why they need to triple-check a wallet address and the target network, or why they need separate Ether for gas on an L2 when they already have it on L1? Yeah, not fun.

In this article, prepared by our diligent researcher @admnov_, we'll dive into the job that's still unfinished: scalability and fragmentation, the security holes that can cost us millions, why moving assets between chains still feels like performing brain surgery, and when you'll be able to run an #Ethereum validator on your smartwatch. Plus, why having 50 different L2s might not be as great as we think. Let's not sugarcoat it: these are complex problems that even top researchers are still scratching their heads over. But understanding them is key to knowing where we're headed. Let's dive in...

Even though we've seen significant development in the #Blockchain and #Crypto space since the last cycle, the job isn't finished. ETH L2s are now very cheap thanks to Proto-Danksharding (EIP-4844) and its blobs, but that's in the current environment of relatively steady demand. Most people who use crypto and transact on-chain are the same ones who used it during the bear market. We have yet to experience a vertical influx of new users like we saw in 2021, when people were paying hundreds of dollars in gas to front-run #NFT purchases.

That will be the true test of the new technology all the brilliant minds in the space have been working on over the past few years. In our recent article, we covered various L2s and alternative L1s boasting high transactions-per-second numbers and novel approaches—check it out if you're interested in the state of current scaling solutions. However, speed alone isn't enough.

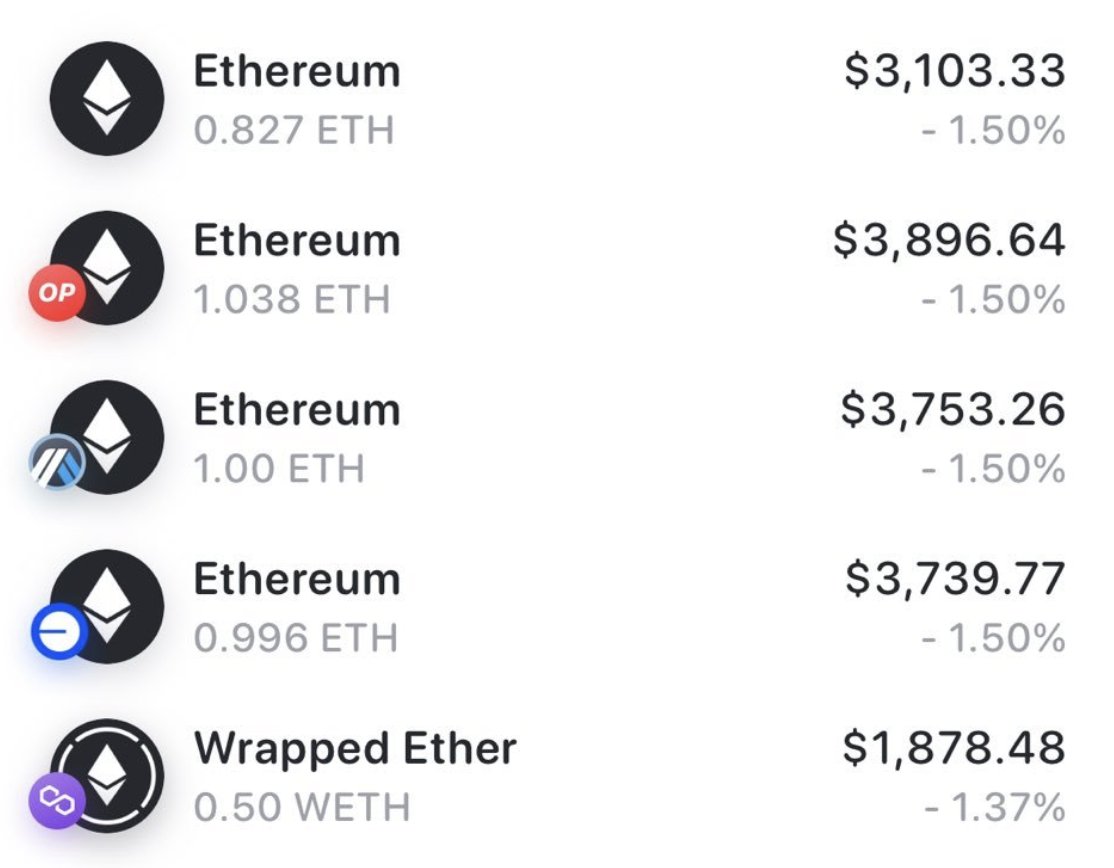

We still face unpredictable and high gas fees, and now we need to monitor blob gas prices too. Blobs have their own fee market on Ethereum: when demand exceeds the target number of blobs per block, the blob base fee rises exponentially. L2s have made significant progress, most notably @Optimism with the OP Stack that many projects are building on. Still, users often criticize the fragmentation of liquidity across L2s and the suboptimal experience of managing assets across multiple layers.
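To make the blob fee dynamics concrete, here is a minimal Python sketch of the blob base fee rule from EIP-4844, using the constants as specified for the Dencun upgrade (later upgrades may change the target and the update fraction). `fake_exponential` follows the spec's integer approximation; the `simulate` helper and the block sequences are our own illustration.

```python
# EIP-4844 blob fee constants as specified at the Dencun upgrade.
GAS_PER_BLOB = 2**17  # 131072 blob gas per blob
TARGET_BLOB_GAS_PER_BLOCK = 393216  # target of 3 blobs per block
MIN_BASE_FEE_PER_BLOB_GAS = 1  # wei
BLOB_BASE_FEE_UPDATE_FRACTION = 3338477


def fake_exponential(factor: int, numerator: int, denominator: int) -> int:
    """Integer approximation of factor * e**(numerator / denominator) via a Taylor series."""
    i = 1
    output = 0
    numerator_accum = factor * denominator
    while numerator_accum > 0:
        output += numerator_accum
        numerator_accum = (numerator_accum * numerator) // (denominator * i)
        i += 1
    return output // denominator


def next_excess_blob_gas(parent_excess: int, parent_blob_gas_used: int) -> int:
    """Excess blob gas grows only while blocks use more than the target."""
    if parent_excess + parent_blob_gas_used < TARGET_BLOB_GAS_PER_BLOCK:
        return 0
    return parent_excess + parent_blob_gas_used - TARGET_BLOB_GAS_PER_BLOCK


def blob_base_fee(excess_blob_gas: int) -> int:
    """Blob base fee in wei, exponential in the accumulated excess."""
    return fake_exponential(
        MIN_BASE_FEE_PER_BLOB_GAS, excess_blob_gas, BLOB_BASE_FEE_UPDATE_FRACTION
    )


def simulate(blobs_per_block: list[int]) -> list[int]:
    """Blob base fee (wei) paid in each block of a hypothetical sequence."""
    excess = 0
    fees = []
    for blobs in blobs_per_block:
        fees.append(blob_base_fee(excess))
        excess = next_excess_blob_gas(excess, blobs * GAS_PER_BLOB)
    return fees
```

Running `simulate([6] * 50)` (every block full at six blobs) shows the fee sitting at the 1 wei floor for a few blocks and then compounding by roughly 12.5% per block, while `simulate([3] * 50)` (exactly at target) never leaves the floor. That is why a sudden wave of demand can make blob prices spike quickly.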

Another crucial topic is ZK rollups, which keep evolving, and the complex computations underlying them that enable unique and private use cases. Zero-Knowledge Proofs remain computationally expensive, and many teams are working to optimize both proof generation and verification through proof compression techniques and transaction bundling.
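For readers new to ZK proofs, the sketch below shows the prover and verifier roles with a classic Schnorr proof of knowledge, made non-interactive with the Fiat-Shamir heuristic. It is a toy: the group is tiny and insecure, and it is a simple Sigma protocol rather than the SNARK/STARK systems that ZK rollups use, so it does not reproduce their heavy proving costs. The parameters `P`, `Q` and `G` are chosen for illustration only.

```python
import hashlib
import secrets

# Toy parameters: P = 2*Q + 1 is a safe prime and G = 4 generates the
# subgroup of prime order Q. Far too small to be secure.
P = 2039
Q = 1019
G = 4


def challenge(*values: int) -> int:
    """Fiat-Shamir: derive the verifier's challenge by hashing the transcript."""
    data = b"|".join(str(v).encode() for v in values)
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(x: int) -> tuple[int, int, int]:
    """Prove knowledge of x such that y = G**x mod P, without sending x."""
    y = pow(G, x, P)
    k = secrets.randbelow(Q - 1) + 1  # fresh random nonce, never reused
    r = pow(G, k, P)  # commitment
    c = challenge(G, y, r)
    s = (k + c * x) % Q  # response
    return y, r, s


def verify(y: int, r: int, s: int) -> bool:
    """Check G**s == r * y**c, which holds only if s was built from the secret."""
    c = challenge(G, y, r)
    return pow(G, s, P) == (r * pow(y, c, P)) % P
```

The verifier ends up convinced that the prover knows `x` for the public `y`, while `r` and `s` are designed not to reveal `x` itself.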

The ZK proving infrastructure would benefit from specialized hardware and dedicated proving networks, allowing applications to focus on their use cases while outsourcing the expensive work of proof generation to a decentralized network.

While zkEVM development is flourishing, we're far from done, especially for implementations that aim for closer equivalence with the EVM. Moreover, for Ethereum to reach its goals, we need real-time proving for zkEVMs, meaning each block is proven within about one slot. ZKPs are particularly crucial for privacy-preserving and integrity-preserving data sharing, digital identities, and voting systems. On the horizon, emerging research is pushing ZKPs towards quantum resistance and multiparty proving.

One of the most anticipated UX improvements has been Account Abstraction. It was introduced through ERC-4337 and, simply put, lets users hold their assets in smart contract wallets instead of Externally Owned Accounts (EOAs), without changes to the core protocol. It provides enhanced security features like multi-signatures, social recovery, whitelisted addresses, and other programmability.
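Conceptually, a smart contract wallet replaces "one key signs everything" with programmable validation rules. The Python model below is a hypothetical illustration of multi-signature approval, a destination whitelist, and guardian-based social recovery. It is not the ERC-4337 interface (real wallets implement `validateUserOp` in a Solidity contract), and `WalletOp` and `SmartWallet` are names we made up for this sketch.

```python
from dataclasses import dataclass, field


@dataclass
class WalletOp:
    """Hypothetical, heavily simplified stand-in for an ERC-4337 UserOperation."""
    to: str
    signers: set[str]


@dataclass
class SmartWallet:
    owners: set[str]
    threshold: int  # multi-signature: how many owner approvals are required
    guardians: set[str]  # social recovery contacts
    guardian_threshold: int
    whitelist: set[str] = field(default_factory=set)  # empty means "any destination"

    def validate(self, op: WalletOp) -> bool:
        """Accept an operation only if it satisfies every configured rule."""
        if len(op.signers & self.owners) < self.threshold:
            return False
        if self.whitelist and op.to not in self.whitelist:
            return False
        return True

    def recover(self, new_owners: set[str], new_threshold: int,
                guardian_signers: set[str]) -> bool:
        """Rotate the owner set (e.g. after a lost key) if enough guardians agree."""
        if len(guardian_signers & self.guardians) < self.guardian_threshold:
            return False
        if not 1 <= new_threshold <= len(new_owners):
            return False
        self.owners = set(new_owners)
        self.threshold = new_threshold
        return True
```

For example, a 2-of-3 wallet with a whitelist rejects a single-signature transfer and any transfer to an unlisted address, and two of three guardians can hand control to a fresh key if the owners lose theirs.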

It also offers improved user experience by allowing gas fees to be paid in tokens other than Ether, enabling transaction bundling, and facilitating mobile app integration to bring dApps to mobile users.
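Paying gas in other tokens works through a sponsor that fronts the ETH and charges the user in an ERC-20 token; in ERC-4337 this role is called a paymaster. The arithmetic such a sponsor performs might look like the sketch below, where the exchange rate, the 5% markup and the `token_fee` helper are all assumptions for illustration (a real paymaster would typically read the price from an oracle).

```python
from decimal import Decimal, ROUND_UP

WEI_PER_ETH = Decimal(10) ** 18


def token_fee(gas_used: int, gas_price_wei: int, eth_price_in_tokens: Decimal,
              token_decimals: int, markup: Decimal = Decimal("0.05")) -> int:
    """Token amount, in the token's smallest units, that a hypothetical
    paymaster would charge for sponsoring gas_used * gas_price_wei of ETH."""
    cost_eth = Decimal(gas_used * gas_price_wei) / WEI_PER_ETH
    cost_tokens = cost_eth * eth_price_in_tokens * (1 + markup)
    # Round up so the sponsor never undercharges because of truncation.
    scaled = cost_tokens * Decimal(10) ** token_decimals
    return int(scaled.to_integral_value(rounding=ROUND_UP))
```

For example, 100,000 gas at 1 gwei costs 0.0001 ETH; at a hypothetical 3,000 tokens per ETH with a 5% markup, `token_fee(100_000, 10**9, Decimal(3000), 6)` returns 315000, i.e. 0.315 of a 6-decimal token.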

However, many of these features are still complex today: they incur higher gas fees, often depend on a network of bundlers or relayers, lack proper dApp integration, need standardization across networks, may overwhelm newcomers, and could introduce new attack vectors.

Oracles have been crucial not just for DeFi, but for connecting blockchains to real-world information, cross-chain data, and most commonly, asset prices. @chainlink, the pioneer among oracles and widely considered a blue chip in the space, offers low-latency data feeds for protocols like @GMX_IO, introduced Economics 2.0 to reward LINK token stakers, and enables bringing off-chain API data onto the blockchain.
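A common way to make a price feed robust, assumed here as a simplified illustration rather than Chainlink's actual protocol, is to collect reports from independent nodes, discard stale ones, and take the median so that a single faulty or malicious node cannot move the price much. The `Report` type, `max_age` and `min_reports` are hypothetical.

```python
import statistics
from dataclasses import dataclass


@dataclass
class Report:
    node: str
    price: float
    timestamp: int  # unix seconds


def aggregate(reports: list[Report], now: int, max_age: int = 60,
              min_reports: int = 3) -> float:
    """Median price over fresh reports, keeping the last report from each node."""
    fresh = {r.node: r for r in reports if now - r.timestamp <= max_age}
    if len(fresh) < min_reports:
        raise ValueError("not enough fresh reports")
    return statistics.median(r.price for r in fresh.values())
```

With fresh reports of 99, 100, 101 and a wildly wrong 5000, the median is 100.5: the outlier shifts the answer only slightly instead of dragging an average to over 1,300.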

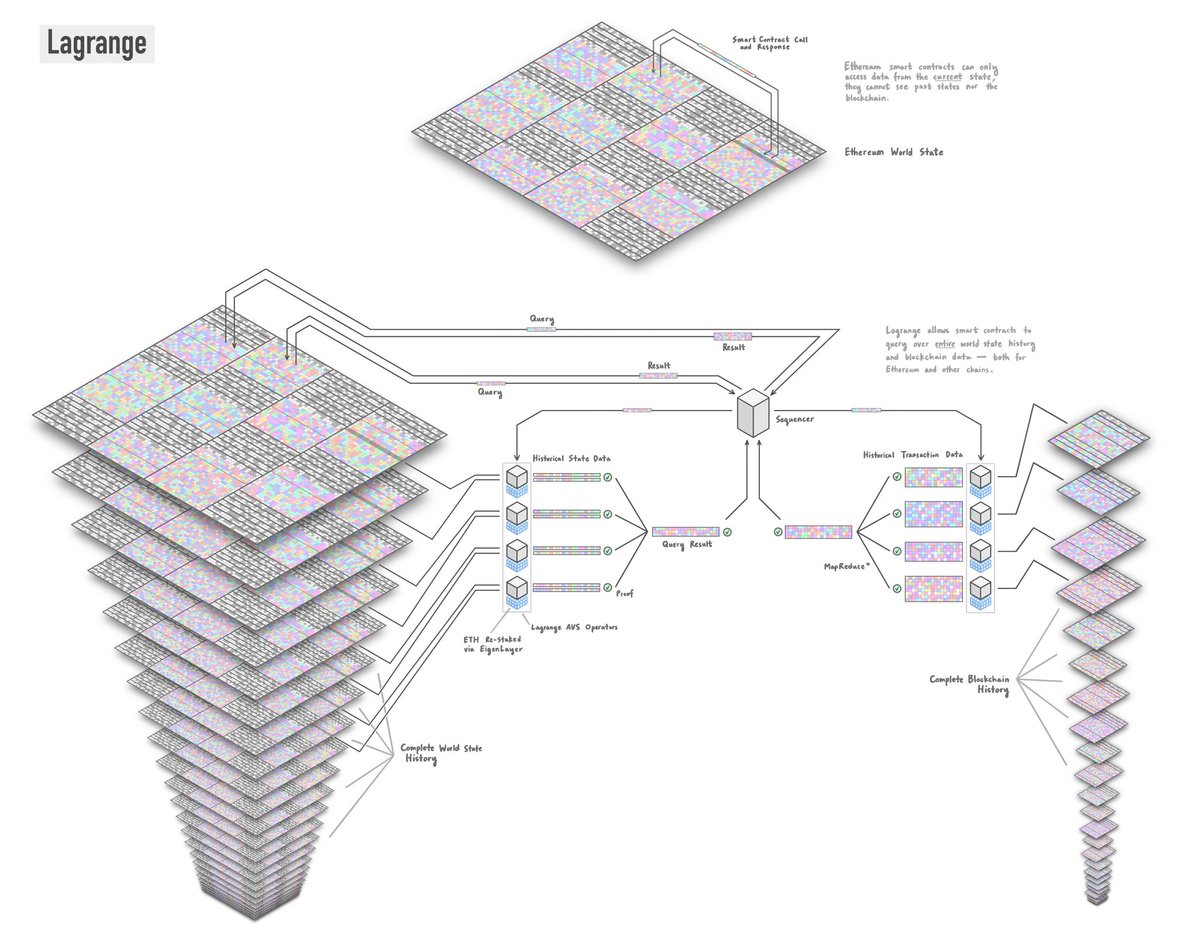

While the @PythNetwork oracle is emerging as a Chainlink competitor, one major limitation remains: access to on-chain history. Enter ZK coprocessors, notably @lagrangedev, which pre-processes blockchain data in a parallelized and distributed way into a prover-friendly, verifiable database that can be queried like SQL from inside a smart contract. This innovation could be huge, as contract execution currently only has transactional and block context, meaning access to historical data is limited. Imagine the possibilities: easily tracking lifetime gas spending, calculating credit scores based on wallet history, or verifying token holdings at specific past block heights, even across chains. This could add a whole new dimension to smart contract execution: the dimension of time.
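Under the hood, checking a historical fact boils down to committing to past state and proving statements against that commitment. The sketch below, a deliberate simplification, shows only the commitment part: a binary SHA-256 Merkle tree over a hypothetical balance snapshot at a past block, plus an inclusion proof that a verifier can check against the stored root without seeing the full state. Ethereum's real state uses a Keccak-based Merkle Patricia Trie, and coprocessors like Lagrange additionally prove whole queries in zero knowledge; none of that is modeled here, and the block number is made up.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def leaf(account: str, balance: int) -> bytes:
    return h(f"leaf:{account}:{balance}".encode())


def build_levels(leaves: list[bytes]) -> list[list[bytes]]:
    """Every level of a binary Merkle tree, from the leaves up to the root."""
    levels = [leaves]
    while len(levels[-1]) > 1:
        cur = levels[-1]
        if len(cur) % 2:  # toy rule: pair an odd last node with itself
            cur = cur + [cur[-1]]
        levels.append([h(cur[i] + cur[i + 1]) for i in range(0, len(cur), 2)])
    return levels


def merkle_proof(levels: list[list[bytes]], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from leaf to root, each tagged with 'sibling is on the left'."""
    proof = []
    for level in levels[:-1]:
        if len(level) % 2:
            level = level + [level[-1]]
        sibling = index ^ 1
        proof.append((level[sibling], sibling < index))
        index //= 2
    return proof


def verify_inclusion(root: bytes, leaf_hash: bytes,
                     proof: list[tuple[bytes, bool]]) -> bool:
    """Recompute the path to the root; only the original leaf reproduces it."""
    node = leaf_hash
    for sibling, sibling_is_left in proof:
        node = h(sibling + node) if sibling_is_left else h(node + sibling)
    return node == root


# Hypothetical balance snapshot committed at a past block.
snapshot = [("alice", 50), ("bob", 20), ("carol", 7)]
levels = build_levels([leaf(a, b) for a, b in snapshot])
historical_roots = {18_000_000: levels[-1][0]}  # block number -> committed root
```

Proving "bob held 20 tokens at block 18,000,000" then needs only bob's leaf and two sibling hashes, checked against a single stored root.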

Many decentralized applications have recently started exploring appchains, with several prominent ones announcing dedicated chains of their own. Notably, @Uniswap will be launching Unichain, built on the OP Stack, to increase transaction throughput, speed up finality, reduce costs by up to 95%, and minimize MEV.

Speaking of Maximal Extractable Value (MEV), it has often been viewed as problematic. In the current model, block proposers accept the highest bid from block builders, who run sophisticated algorithms to order transactions for their own financial benefit. Builders ultimately decide which transactions are included and in what order. This raises concerns about censorship and market manipulation, especially since roughly 88% of Ethereum blocks are built by just two builders.
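The auction rule itself is simple, which is part of why builder concentration matters: the proposer does not care who built the block, only who pays most. A toy model, with made-up builders and bid values, where the proposer takes the highest bid and we measure how much of the block flow the top two builders capture:

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Bid:
    builder: str
    value_wei: int  # payment the builder offers the proposer


def choose_bid(bids: list[Bid]) -> Bid:
    """The proposer takes the highest-paying block, whoever built it."""
    return max(bids, key=lambda b: b.value_wei)


def top_builders_share(winning_builders: list[str], n: int = 2) -> float:
    """Fraction of blocks built by the n most frequent winning builders."""
    counts = Counter(winning_builders)
    return sum(c for _, c in counts.most_common(n)) / len(winning_builders)
```

Builders with better order flow can consistently outbid others, so over many slots a few names win most auctions, which is the kind of concentration the share metric captures.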

Numerous research papers have focused on MEV mitigation strategies. Vitalik's recent blog post highlights approaches like inclusion lists (where proposers create a list of transactions that block builders can only add to and reorder) and BRAID (where multiple parallel proposers each create a list, and the lists are combined in a deterministic order). Both approaches require encrypted mempools, which remain a challenge to implement.
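A minimal sketch of both ideas, assuming transactions are plain strings: an inclusion-list check that lets the builder add and reorder transactions but not drop listed ones, and a BRAID-style merge where the combined list is ordered by transaction hash. Sorting by hash is our hypothetical stand-in for "deterministic ordering", and real proposals must also handle details this ignores, such as full blocks and spam.

```python
import hashlib


def tx_hash(tx: str) -> str:
    return hashlib.sha256(tx.encode()).hexdigest()


def satisfies_inclusion_list(block: list[str], inclusion_list: list[str]) -> bool:
    """The builder may add and reorder transactions, but must keep every listed one."""
    return set(inclusion_list) <= set(block)


def merge_parallel_lists(lists: list[list[str]]) -> list[str]:
    """Union of several proposers' lists, ordered by a rule no single party controls."""
    union = set().union(*lists)
    return sorted(union, key=tx_hash)
```

Because the merged order depends only on the set of transactions, every node computes the same sequence regardless of which proposer's list arrived first.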

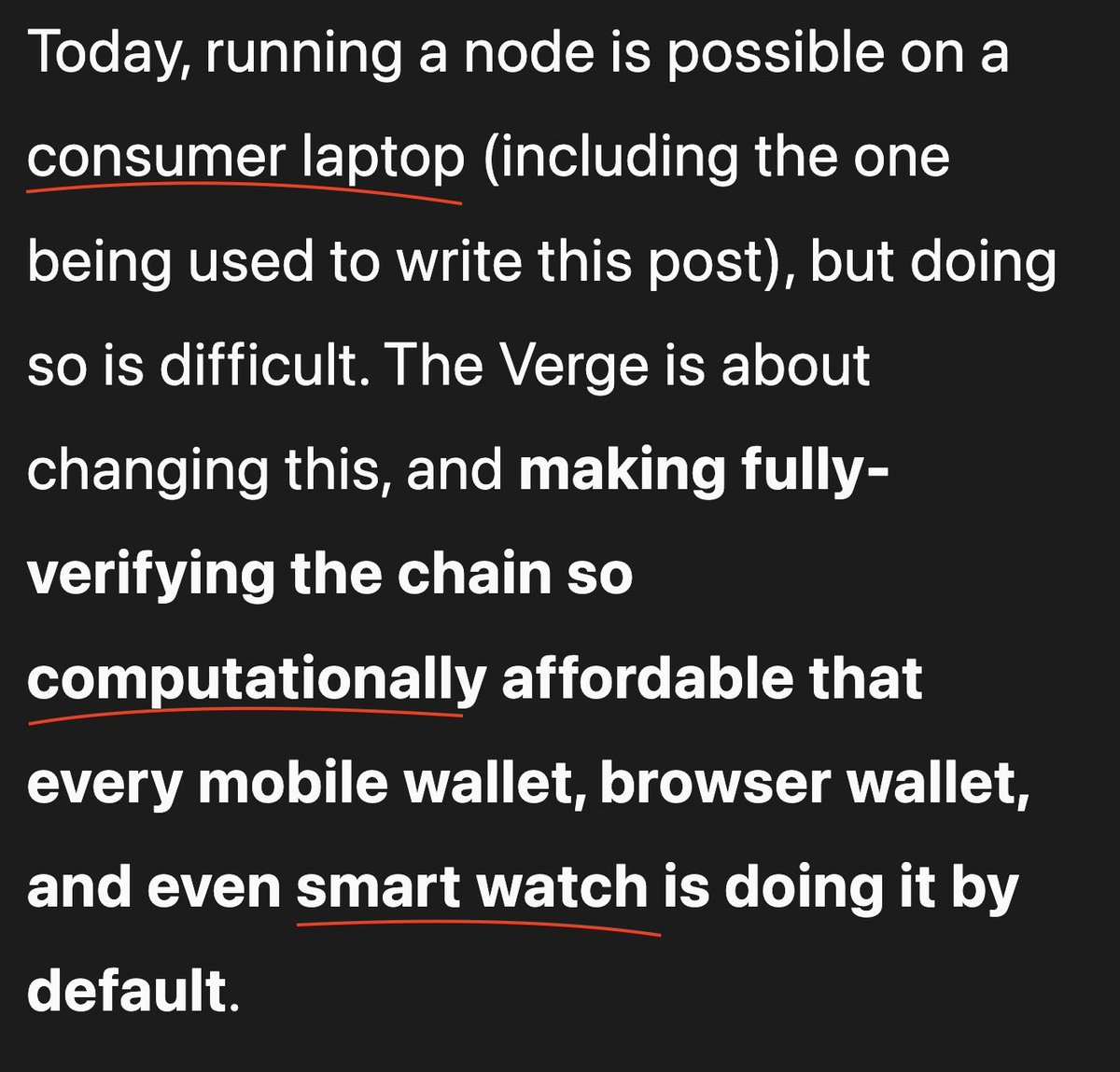

Regarding Ethereum validators, the community has been pursuing multiple goals: achieving single-slot finality, reducing the minimum stake requirement from 32 ETH, and lowering node operation requirements. These goals are challenging to achieve while maintaining economic finality, meaning that reverting finalized blocks requires burning substantial ETH.
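The tension between these goals is easy to see with rough numbers. The sketch below assumes a hypothetical 30M ETH of total stake. Under Casper FFG's accountable-safety property, finalizing two conflicting checkpoints requires at least one third of the stake to break a slashing rule, while the number of validators (and therefore the signatures a single slot would need to aggregate) grows as the minimum stake drops.

```python
# Hypothetical total stake, chosen for round numbers.
TOTAL_STAKE_ETH = 30_000_000


def max_validators(total_stake_eth: int, min_stake_eth: int) -> int:
    """Upper bound on validator count, i.e. signatures per slot if everyone must sign."""
    return total_stake_eth // min_stake_eth


def min_slashed_to_revert(total_stake_eth: int) -> float:
    """Casper FFG accountable safety: conflicting finalized checkpoints imply
    that at least 1/3 of the stake broke a slashing rule."""
    return total_stake_eth / 3


for min_stake in (32, 1):
    count = max_validators(TOTAL_STAKE_ETH, min_stake)
    print(f"min stake {min_stake} ETH -> up to {count:,} validators")
print(f"reverting finality costs at least {min_slashed_to_revert(TOTAL_STAKE_ETH):,.0f} ETH")
```

With 30M ETH staked, reverting finality costs at least 10M ETH, but lowering the minimum from 32 ETH to 1 ETH would raise the validator count from 937,500 to as many as 30M, far too many signatures to process in every slot.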

Approaches to single-slot finality include randomly selecting medium-sized committees and introducing lower-stake tiers with limited responsibilities. The vision of enabling anyone to run a validator depends on stateless validation—the ability to verify blocks without maintaining the entire blockchain state.
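Committee selection can be sketched as seeded pseudo-random sampling, where every node derives the same committee from a shared seed. This is a simplification: Ethereum derives its randomness from RANDAO and uses its own shuffling algorithm, and Python's `random` module is not cryptographically secure. The `committee_finality_cost` helper assumes, for illustration, that only the committee's stake backs finality, which is exactly the economic-finality trade-off mentioned above.

```python
import random


def sample_committee(validators: list[str], size: int, seed: int) -> list[str]:
    """Every node derives the same committee from the shared seed."""
    return random.Random(seed).sample(validators, size)


def committee_finality_cost(size: int, stake_each_eth: int) -> float:
    """Slashable stake if only the committee backs finality (simplifying assumption)."""
    return size * stake_each_eth / 3


validators = [f"v{i}" for i in range(100_000)]  # hypothetical validator set
committee = sample_committee(validators, 4096, seed=42)
```

A committee of 4,096 validators at 32 ETH each puts about 131k ETH behind a finalized block, so reverting it would cost roughly 43.7k ETH under this assumption, far below the network-wide figure. Closing that gap is what committee-based designs have to address.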

This requires changing Ethereum's state tree from the current Merkle Patricia Trie to either Verkle Trees (fast, but not quantum resistant) or Binary Trees with STARK proofs (quantum resistant, but with longer proving times).
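One reason binary trees are attractive for stateless validation is witness size: a validator without the state must receive proof data for everything a block touches. The rough calculation below counts only the sibling hashes in a single Merkle branch for a hypothetical state of 2^32 leaves; it ignores real Merkle Patricia Trie encoding details and does not model Verkle proofs.

```python
HASH_BYTES = 32


def tree_depth(num_leaves: int, arity: int) -> int:
    """Smallest depth d with arity**d >= num_leaves."""
    depth, capacity = 0, 1
    while capacity < num_leaves:
        capacity *= arity
        depth += 1
    return depth


def proof_bytes(num_leaves: int, arity: int) -> int:
    """Sibling hashes in one Merkle branch: (arity - 1) per level."""
    return (arity - 1) * HASH_BYTES * tree_depth(num_leaves, arity)
```

For 2^32 leaves this gives 3,840 bytes per branch for a hexary (16-ary) tree versus 1,024 bytes for a binary tree: having fewer levels does not make up for carrying 15 siblings per level.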

@StarkWareLtd has improved proving times using Poseidon, a hash function designed to be cheap to prove inside STARKs, though Poseidon is still relatively immature and requires further testing.

@VitalikButerin emphasizes that reducing history storage is even more crucial than statelessness. EIP-4444 aims to let nodes stop storing and serving chain history older than about one year, which in turn requires a distributed solution for storing that history. These are just a few aspects of Ethereum's roadmap; for a more comprehensive explanation, refer to Vitalik's blog.
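EIP-4444 expresses the retention window in epochs. Assuming the parameter value proposed in the EIP (`HISTORY_PRUNE_EPOCHS = 82125`) together with today's 12-second slots and 32-slot epochs, the window works out to exactly 365 days; the `may_prune` helper and its boundary handling are our own illustration.

```python
SECONDS_PER_SLOT = 12
SLOTS_PER_EPOCH = 32
HISTORY_PRUNE_EPOCHS = 82125  # value proposed in EIP-4444


def may_prune(block_epoch: int, current_epoch: int) -> bool:
    """History older than the retention window no longer has to be served."""
    return current_epoch - block_epoch > HISTORY_PRUNE_EPOCHS


retention_days = HISTORY_PRUNE_EPOCHS * SLOTS_PER_EPOCH * SECONDS_PER_SLOT / 86400
```

Anything older than that window would have to come from the distributed history storage mentioned above rather than from every node.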

Finally, let's examine decentralized data challenges. While not directly blockchain technology, this area needs improvement, especially with the rise of decentralized social networks.

Unlike centralized services that handle storage, indexing, data serving, and moderation compliance, decentralized services like @Filecoin can't provide these features out of the box. You can't use Google to access IPFS-hosted files without an intermediary.

Projects like @GlitterProtocol are making content discovery easier by enabling simple searches instead of requiring specific Content Identifier (CID) hashes. However, content filtration in decentralized systems presents philosophical challenges. Moderation is a double-edged sword: censorship fundamentally opposes decentralization, but unrestricted content access (including NSFW, spam, phishing) isn't viable. The key question becomes who decides what content is acceptable and how.

For instance, IPFS HTTP gateways often filter flagged content, though native access to the IPFS protocol bypasses these restrictions.

Alternatively, DAOs could approve safe content with verification stamps, limiting searches to approved results. However, aligning moderation with decentralization principles requires further development.
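The mechanics behind these trade-offs can be sketched in a few lines. In the toy store below, identifiers are derived from the content itself (so anyone can verify what they fetched), a keyword index lets users search instead of knowing an identifier, a gateway-style denylist hides flagged items, and an optional DAO-style allowlist restricts results to approved content. Real IPFS CIDs use multihash/multibase encodings rather than a bare SHA-256 hex digest, and the class and its methods are hypothetical rather than any project's actual API.

```python
import hashlib
from typing import Optional


class ToyContentStore:
    """Content-addressed store with a keyword index and optional moderation lists."""

    def __init__(self) -> None:
        self.blobs: dict[str, bytes] = {}
        self.index: dict[str, set[str]] = {}

    def put(self, content: bytes, keywords: list[str]) -> str:
        cid = hashlib.sha256(content).hexdigest()  # identifier derived from the content
        self.blobs[cid] = content
        for word in keywords:
            self.index.setdefault(word.lower(), set()).add(cid)
        return cid

    def get(self, cid: str) -> bytes:
        content = self.blobs[cid]
        # Anyone can check that the bytes match the identifier they asked for.
        if hashlib.sha256(content).hexdigest() != cid:
            raise ValueError("content does not match its identifier")
        return content

    def search(self, word: str, denylist: frozenset[str] = frozenset(),
               allowlist: Optional[frozenset[str]] = None) -> list[str]:
        """Keyword search; a gateway denylist removes hits, a DAO allowlist narrows them."""
        hits = self.index.get(word.lower(), set()) - denylist
        if allowlist is not None:
            hits &= allowlist
        return sorted(hits)
```

Note where the policy lives: the stored data is untouched, and only the search layer decides what to show, which mirrors how gateway filtering can be bypassed by querying the network directly.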

As you can see, blockchain technology is still working through its growing pains, from scaling and security to making everything work together smoothly. While we've made massive strides with L2s, ZK proofs, and smart contract wallets, there's still plenty of exciting work ahead.

These aren't just technical challenges, though; they're the foundation of what could make or break mainstream adoption. The solutions might take time, but with some of the brightest minds in the space working on these problems, the future looks promising.

Keep building, keep questioning, and stay curious—the best innovations often come from the most challenging problems.